Intro

With the goal of becoming more data-driven, we have expanded our suite of acceptance tests with a new type of test whose main purpose is to collect metrics for mongo transactions under various deployment scenarios for changes targeting the 2.5 and develop branches.

The collected metrics will help us establish a baseline for transaction performance and provide an early warning sign if a change to the code-base suddenly causes transaction times to spike.

Furthermore, we now have an automated way to compare juju's performance when running on mongo 3 versus mongo 4. The same mechanism will also enable us to assess any performance gains from the upcoming work on server-side transactions with mongo 4.

How it works

Each test initially bootstraps a new controller with either juju’s built-in mongo version or an externally provided mongo snap (currently internally hard-coded to mongo 4.0.5). Tests may also request a particular mongo profile to be used for the bootstrapped controller.

Once the controller is up and running, the test code attempts to deploy the bundle that was provided as an argument to the test. Bundles can be:

- local, specified as an absolute path within the acceptance test repository

- remote, specified as a charm-store URI
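The post does not describe how the test tells the two kinds of bundle arguments apart. The sketch below shows one plausible approach, assuming that anything starting with `cs:` is a charm-store URI and any absolute path is a local bundle. `classifyBundle` and `BundleKind` are hypothetical names, not part of the actual test harness.

```go
package main

import (
	"fmt"
	"path/filepath"
	"strings"
)

// BundleKind distinguishes the two ways a bundle can be passed to a test.
type BundleKind int

const (
	LocalBundle  BundleKind = iota // absolute path within the acceptance test repository
	RemoteBundle                   // charm-store URI such as cs:~user/name-N
)

// classifyBundle is a hypothetical helper: a "cs:" prefix marks a
// charm-store URI, an absolute path marks a local bundle, and anything
// else is rejected.
func classifyBundle(arg string) (BundleKind, error) {
	switch {
	case strings.HasPrefix(arg, "cs:"):
		return RemoteBundle, nil
	case filepath.IsAbs(arg):
		return LocalBundle, nil
	default:
		return 0, fmt.Errorf("bundle %q is neither a charm-store URI nor an absolute path", arg)
	}
}

func main() {
	// The local path below is made up for illustration.
	for _, arg := range []string{"cs:~jameinel/ubuntu-lite-7", "/tmp/bundles/webscale-lxd.yaml", "relative.yaml"} {
		kind, err := classifyBundle(arg)
		fmt.Printf("%s -> kind=%d err=%v\n", arg, kind, err)
	}
}
```

Rejecting relative paths up front mirrors the requirement that local bundles be given as absolute paths.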

While the deployment is in progress, the test keeps track of the total deployment time and, at the same time, collects the execution time and number of retries for each executed transaction. This is achieved by turning on trace logging for the juju.state.txn module and grepping the logs for transaction-related entries.

Once the bundle is successfully deployed, the metrics get pre-aggregated and the following stats are calculated for each metric:

- transaction time:
  - min
  - max
  - mean
  - median
  - stddev
  - total
  - count

- transaction retries:
  - min
  - max
  - mean
  - median
  - stddev
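The per-metric statistics listed above can be computed in a single pass over a sorted copy of the samples. The sketch below is not the harness's actual code. `summarize` is a hypothetical helper, and the choice of the population (rather than sample) standard deviation is an assumption. The same function can be applied to retry counts converted to `float64`, simply ignoring the fields not reported for retries.

```go
package main

import (
	"fmt"
	"math"
	"sort"
)

// Summary mirrors the per-metric statistics listed above.
type Summary struct {
	Min, Max, Mean, Median, StdDev, Total float64
	Count                                 int
}

// summarize computes the statistics for a non-empty slice of samples.
// StdDev uses the population formula (an assumption, see lead-in).
func summarize(xs []float64) (Summary, error) {
	if len(xs) == 0 {
		return Summary{}, fmt.Errorf("no samples")
	}
	sorted := append([]float64(nil), xs...) // copy so the caller's slice is untouched
	sort.Float64s(sorted)

	s := Summary{Min: sorted[0], Max: sorted[len(sorted)-1], Count: len(sorted)}
	for _, x := range sorted {
		s.Total += x
	}
	s.Mean = s.Total / float64(s.Count)

	// Median: middle element, or the mean of the two middle elements for even counts.
	mid := s.Count / 2
	if s.Count%2 == 1 {
		s.Median = sorted[mid]
	} else {
		s.Median = (sorted[mid-1] + sorted[mid]) / 2
	}

	var sq float64
	for _, x := range sorted {
		sq += (x - s.Mean) * (x - s.Mean)
	}
	s.StdDev = math.Sqrt(sq / float64(s.Count))
	return s, nil
}

func main() {
	// Made-up transaction times in milliseconds.
	s, err := summarize([]float64{2, 4, 4, 4, 5, 5, 7, 9})
	if err != nil {
		panic(err)
	}
	fmt.Printf("%+v\n", s)
}
```

For the sample values in `main`, this gives min 2, max 9, total 40, count 8, mean 5, median 4.5 and stddev 2.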

The metrics are then shipped to an InfluxDB instance. Before shipping, each metric is tagged with the following information:

- charm-bundle

- charm-urls

- git-sha (the short SHA of the branch used to run the tests)

- juju-version

- mongo-profile

- mongo-ss-txns (true or false depending on whether server-side transactions are enabled)

- mongo-version

What scenarios are being run at the moment

The scenarios are generated from the combinations of the following set of parameters:

- mongo version:
  - 3.x
  - 4.0.5 (only tested against develop as it requires the mongodb-snap feature flag)

- mongo profile:
  - low
  - default

- bundle:
  - webscale-lxd (a percona/keystone bundle)
  - cs:~jameinel/ubuntu-lite-7

There is ongoing work to add more bundles to the list so we can obtain data for different workloads. In particular:

- a full openstack bundle deployed on GUIMAAS (or some other beefy node)

- k8s (in the future)

Visualizing the data

To visualize the data, a Grafana dashboard is provided. A small caveat is that you need to log in twice to access it: first visit https://cloud.kpi.canonical.com and log in with the appropriate cloud-city credentials, then sign in via OpenID by clicking the button in the bottom-left corner of the screen. This gives you access to the webscale results dashboard.

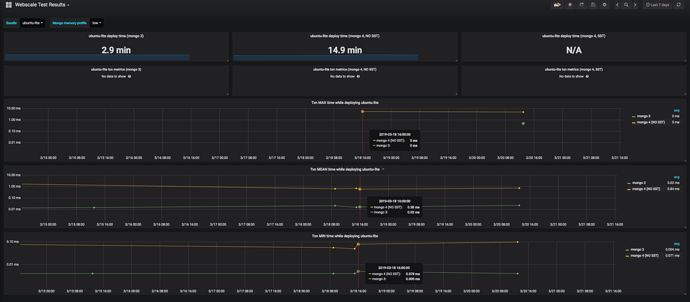

Here is what the dashboard looks like. You can use the drop-down boxes in the top row to display the captured metrics for a particular bundle and mongo profile combination. Please keep in mind that the vertical axes use a logarithmic scale.

Interesting observations so far

- It seems that when using the low mongo profile, mongo 3 consistently blows mongo 4 out of the water: deployment times for ubuntu-lite spike from 3 min with mongo 3 to 15 min with mongo 4.

- Switching to the default memory profile gives nearly comparable performance, although mongo 3 still fares a bit better: deploying ubuntu-lite with mongo 4 takes about 2 minutes longer than with mongo 3.

Acknowledgments

Kudos to @simonrichardson for laying the groundwork for setting up and running the webscale tests!